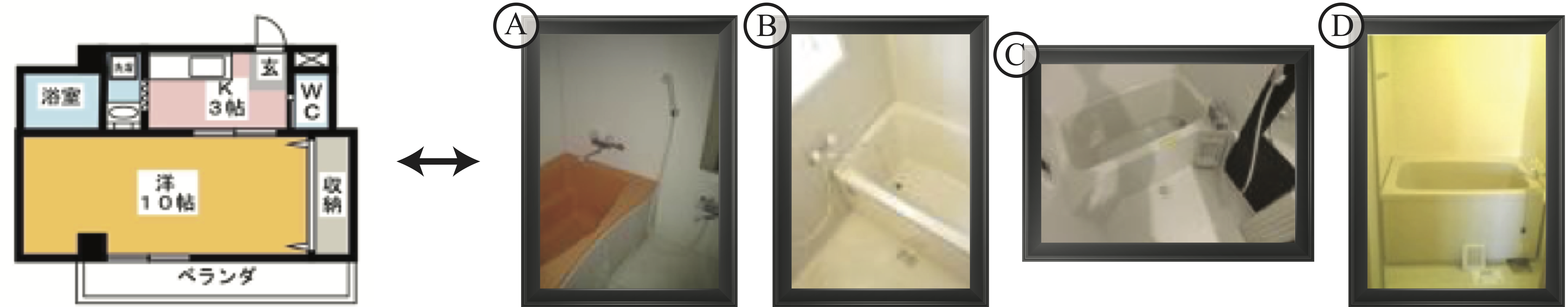

Figure 1: Which of the four photographs corresponds to the floorplan on the left? The task requires careful and sophisticated human reasoning, unlike many other computer vision problems that humans can solve at a glance. This paper explores the potential of deep neural networks in solving such a problem. The answer is C.

Abstract

Inferring correspondences between images from different modalities is an extremely important perceptual ability that enables humans to understand and recognize cross-modal concepts. In this paper, we consider an instance of this problem: matching photographs of building interiors with their corresponding floorplans. This is particularly challenging because a floorplan, as a stylized architectural drawing, is very different in appearance from a color photograph. Furthermore, an individual photograph depicts only part of a floorplan (e.g., a kitchen, bathroom, or living room). We propose a number of different neural network architectures for this task, which are trained and evaluated on a novel large-scale dataset of 5 million floorplan images and 80 million associated photographs. Experimental evaluation reveals that our neural network architectures are able to identify visual cues that result in reliable matches across these two quite different modalities. In fact, the trained networks even outperform human subjects in several challenging image matching problems. Our results imply that neural networks are effective at perceptual tasks that require long periods of reasoning even for humans to solve.